Creating lifelike digital avatars is crucial for immersive gaming experiences, but it has traditionally been a resource-intensive process. At Ubisoft La Forge, we're constantly pushing the boundaries of what's possible in character creation and animation. MoSAR (Monocular Semi-Supervised Model for Avatar Reconstruction), presents a novel approach to generating detailed 3D avatars from a single 2D image. This method aims to significantly improve the efficiency and accessibility of high-quality avatar creation, potentially opening new possibilities for personalized characters in our games. (Monocular Semi-Supervised Model for Avatar Reconstruction), presents a novel approach to generating detailed 3D avatars from a single 2D image. This method aims to significantly improve the efficiency and accessibility of high-quality avatar creation, potentially opening new possibilities for personalized characters in our games.

How video-game avatars are traditionally created

Creating realistic avatars for video game characters has traditionally been a complex and time-consuming process. Typically, it starts with artists sculpting a detailed 3D model using specialized software. This base model is then refined and given texture maps to define skin color, wrinkles, and other surface details.

An alternative approach involves using a light stage to capture an actor's head. The process begins with high-fidelity scanning, followed by computationally intensive data processing that can take hours or even days. Scans often require manual clean-up and refinement by skilled artists. The high-density scan mesh must then be registered to a lower-density mesh topology, optimized for real-time rendering. After adapting the processed scan to fit the game's character model and art style, animators create a facial rig to bring the character to life.

Both methods, while capable of producing high-quality results, are labor-intensive and can be challenging to scale for large numbers of unique characters in expansive game worlds. As a result, there's growing interest in research on building high-quality avatars from a single image, which could potentially offer a more scalable solution.

![[Studio LaForge]MoSAR - Generating relightable character avatars from a single portrait image - P01](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/2KCtVVJrdY79HCjGE3PGWH/c50644fe463a9290abb1d1c382d6c18f/Picture1__1_.jpg)

From Everyday Photos to Lifelike Characters

Building an avatar from a single image is a challenging task due to the inherent limitations of 2D representations. A single photo provides only one perspective, lacking information about the subject's full 3D structure. Additionally, everyday photos can have large variations in lighting, pose, and facial expression. The lack of controlled lighting conditions also makes it difficult to separate intrinsic facial properties (like skin color and texture) from environmental factors, such as the lighting conditions.

To address these challenges, we propose a novel way of training models with a combination of high-quality light stage data (captured under controlled studio conditions) and ordinary everyday images (also known as "in-the-wild" in the literature). This semi-supervised learning technique allows the model to produce highly detailed results while still generalizing well to everyday photos.

Here's an overview of our pipeline:

![[Studio LaForge]MoSAR - Generating relightable character avatars from a single portrait image - P02](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/3PRk4SjQTDNpDHI6Va9ZJK/1935d3236f3acd2caf8e45f95e492b4a/Picture2.png)

Geometry estimation

The first step in the pipeline is estimating the 3D Geometry of the subject's face. MoSAR does this with a parametric representation of the scene:

![[Studio LaForge]MoSAR - Generating relightable character avatars from a single portrait image - P03](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/5WETSW9AYXhdpXydFurDwp/069bd274ac4c437750d62b3de2c84448/Picture3__1_.png)

We train a neural network that estimates the face's shape (identity), face expression, skin color, light and camera parameters. Departing from traditional linear approaches, MoSAR employs a non-linear morphable model, which we show to be more accurate in capturing facial structures.

The estimated scene parameters are used in a differentiable renderer (DR). The renderer allows MoSAR to be trained in a semi-supervised manner. The model learns from both "in-the-wild" images (without 3D ground truth) and high-quality light stage data (which provides ground truth information). This dual approach in training - combining self-supervised learning from in-the-wild images with supervised learning from light stage data - is key to MoSAR's effectiveness. It helps the model generalize well to a wide range of real-world scenarios while maintaining high accuracy in its 3D reconstructions.

Skin reflectance estimation

The next step is to estimate the intrinsic skin reflectance maps. These layered maps are vital for modern rendering engines to achieve realistic results.

The process begins by using the estimated geometry to project the input image onto UV space. Then, a neural network is trained to perform in-painting, filling in the holes corresponding to occluded areas of the face in the original image.

Next, MoSAR normalizes the light information in these textures. This step is necessary to remove harsh shadows and strong lighting effects from the image. By doing so, it prevents baking light information into the intrinsic maps, which would otherwise lead to unrealistic results when the face is rendered under different lighting conditions.

The last step is to train separate networks to estimate intrinsic face attribute maps, including diffuse, specular, ambient occlusion, translucency, and normal maps, all at 4K resolution.

A key innovation in MoSAR is its differentiable shading formulation. This extends the Cook-Torrance BRDF model used with spherical harmonics and introduces a new differentiable formulation that incorporates ambient occlusion and translucency. This formulation is crucial as it allows the model to be trained to predict these complex maps accurately.

This model is also trained in a semi-supervised way. By combining labeled light stage data with unlabeled in-the-wild images, the model learns to generalize well to real-world scenarios while providing high quality texture maps.

![[Studio LaForge]MoSAR - Generating relightable character avatars from a single portrait image - P04](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/57FUQpf3e8HPujdfWJp01l/9309c585b04f25f983fa1f9ee6c49ed4/Picture4.png)

Results: Pushing the Boundaries of Single-Image Avatar Generation

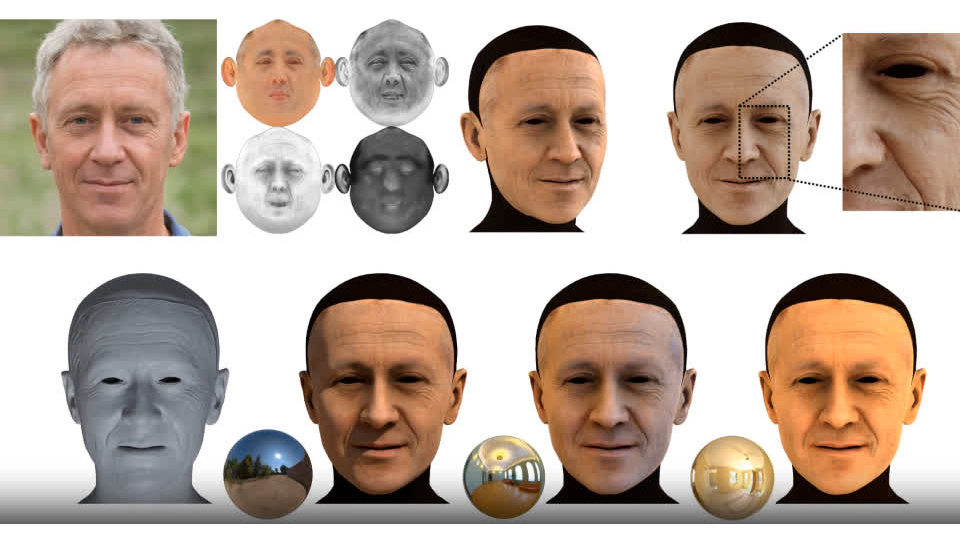

MoSAR can estimate detailed geometry and layered texture maps from a single everyday image. These outputs allow for a highly realistic reconstruction of the subject's face, capable of being rendered under various lighting conditions.

One of MoSAR's standout features is its ability to produce high-quality textures, including pore-level details, at 4K resolution. This allows for the creation of highly detailed avatars that look realistic even when viewed up close in today's graphics-intensive games.

In terms of geometry estimation, MoSAR demonstrates significant improvements over existing methods, particularly those relying on linear morphable models. The non-linear approach used by MoSAR allows it to capture more detailed facial geometry, resulting in a more accurate representation of the subject's features.

![[Studio LaForge]MoSAR - Generating relightable character avatars from a single portrait image - P07](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/25ASOirUOHRo7c5kM4xQAK/2f4dc3bf9ded498127ac31c63b7f18fd/Picture7.jpg)

The reconstructed 3D models show enhanced detail in areas such as the nose, eyes, cheeks, and overall face shape. MoSAR's ability to capture higher-frequency details like wrinkles and folds is particularly noteworthy, providing a level of realism that surpasses many current state-of-the-art methods.

Quantitatively, MoSAR's performance has been validated on the REALY public benchmark, where it achieved second place. This demonstrates that the non-linear morphable model approach is not only visually impressive but also competitive in standardized evaluations of 3D face reconstruction accuracy.

![[Studio LaForge]MoSAR - Generating relightable character avatars from a single portrait image - T01](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/2smIpHogxI1krnXFWmz1hX/e99fb34ab7fc5ceab6030eefd5f8e5f6/Table1.png)

In terms of reflectance estimation, MoSAR's approach yields superior results in relighting scenarios. This improved performance can be attributed to the model's better disentanglement of intrinsic face attributes. By accurately separating and estimating diffuse, specular, ambient occlusion, translucency, and normal maps, MoSAR creates a more faithful representation of the face's reflectance properties.

This enhanced disentanglement allows for more realistic rendering under various lighting conditions. Unlike some other methods that might bake lighting information into the texture maps, MoSAR's approach ensures that the estimated reflectance maps truly represent the intrinsic properties of the face, independent of the original lighting conditions.

As a result, avatars created using MoSAR's reconstructions exhibit more natural and convincing appearances when relit, outperforming other state-of-the-art methods:

Conclusion

MoSAR represents a significant step forward in 3D avatar generation from single images. It can create detailed, relightable avatars from everyday photos. The ability to derive this level of detail and separation of facial attributes from a single image represents a significant advancement in the field of 3D face reconstruction.

Our research demonstrates promising results in generating high-quality geometry and texture maps, including fine details at 4K resolution. By generating separate maps for different facial attributes, we're providing animators and designers with powerful tools for further customization and animation. This technology has the potential to enhance character creation in video games, making it more accessible and efficient.

While there's still work to be done, the results we've achieved with MoSAR are encouraging. At Ubisoft La Forge, we remain committed to exploring innovative solutions that can improve the gaming experience. As we continue to refine this technology, we look forward to seeing how it might contribute to the future of character creation in video games.