One of the most misunderstood principles in artificial intelligence relates to systems that seek to reproduce human intelligence. After all, the very notion of artificial intelligence is vague, as it is a constantly evolving field of study. For Gutenberg, a spellchecker would have seemed like pure witchcraft, whereas today it is regarded as a useful, yet very basic, feature of text editing programs. John McCarthy, the researcher who coined the term “artificial intelligence” in the mid-1950s, is often quoted as saying, “As soon as it works, no one calls it AI anymore.” As technology evolves, so do our points of reference. Today, the Human Brain Project [i] created by EPFL in Switzerland and the BRAIN (Brain Research through Advancing Innovative Neurotechnologies) Initiative in the U.S. are both striving to simulate and map the human brain.

The myth of humans who can create artificial life is not new to us. It has fuelled many works of art such as Mary Shelley’s Frankenstein and Alex Garland’s Ex Machina. While the singularity remains a myth[ii], it is less well known that some of the anthropomorphic principles that make these works so fascinating and their message so timeless also underpin several key ideas in programming.

In 1948, Alan Turing wrote a paper entitled Intelligent Machinery, which uses the analogy with the human brain as a guiding principle. Turing points out that education through learning is at the basis of all human intelligence and provides several examples of simple machines that can learn through a system of rewards and punishments.

In this article, I’m going to attempt to show how this approach can be extremely useful in the process of creating video games.

Bots. What are they used for?

The word “bot” is a contraction of the word “robot,” which was coined in 1920 in the stage play R.U.R. (Rossum’s Universal Robots) by Karel Čapek. It comes from the Czech word robota, which means “chore.” While robots were originally designed to carry out a physical task in place of a human being, a computer bot is a software “agent” that reproduces human behaviour on a computer server.

Bots have a variety of video game applications, but we can divide them all into three broad categories: automating certain tests, simulating players for online games, and creating autonomous agents to populate virtual worlds.

There are several techniques for using bots, but it is important to understand that a bot cannot be dissociated from its use. For example, if you program a bot to carry out tests, it must pursue that objective as efficiently as possible in order to identify bugs quickly. Autonomous agents, like vehicles or animals, must perform a rich, believable variety of behaviours in their virtual ecosystem, whereas a bot simulating an online player must take into account human reaction times in all its actions.

It is also important to understand that a bot cannot easily be transposed from one usage to another, and it is vital to draw a distinction between these mutually exclusive families.

Automated tests

Video games are complex computer programs. For example, a fighting game offers a wide array of options for attacking, parrying and moving around. The game’s richness is associated with the depth of the gameplay, i.e., the variety of these options, but also the possibilities for combining them. The more options a game offers, the more combinations players can make, to the extent that it becomes impossible to test them all manually and in all the game contexts. However, it is also possible that one of these combinations could spoil the gaming experience, like when a combination consistently leads to a win. In successful games, several million players play millions of rounds every day. Statistically speaking, it is quite possible that a player might stumble across such a combination and take advantage of it, or even share their discovery with other players. This is called an exploit, a bug that needs to be fixed in order to rebalance the gaming experience.

Moreover, games are now moving toward a model of gaming as an online service or platform. In other words, the game is regularly updated to adapt the experience or to add new content. Not only must this new content work, but it must also coexist with the earlier game features and not introduce any glitches that might spoil the existing gameplay.

So, what if we had super testers? Testers able to play millions of games with the sole aim of detecting exploits, sure-fire winning combinations or unbalanced design scenarios.

This is precisely the aim of automated testing bots. The idea is simple: you give a system control of the game, much as you would a player, and set a specific number of rewards (for example, when the system manages to win in fewer than a certain number of hits, or in less than a certain amount of time) and punishments (for example, when the system loses points or a game). The system then explores all the actions and combinations that will enable it to maximize its rewards by playing an extremely large number of games. This allows a game designer to set certain parameters (such as damage caused by a particular weapon), then leave the bots testing game balance to work overnight so the results can be analyzed the next day. Are there any unstoppable hits? Is overall balance maintained in the win/loss ratios?
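To make the idea concrete, here is a minimal Python sketch of such a balance-testing bot. Everything in it is an assumption made for illustration: the three moves and their numbers are a hypothetical toy game, the reward is simply +1 for a knockout within the hit limit and -1 otherwise, and the learning rule is a basic epsilon-greedy strategy, which is only one of many ways to explore. The "sweep" move is deliberately overtuned so that there is something for the bot to find.

```python
import random

# Hypothetical toy move set: name -> (damage, hit probability).
# "sweep" is deliberately overtuned so the bot has an exploit to discover.
MOVES = {
    "jab": (5, 0.9),
    "kick": (9, 0.6),
    "sweep": (20, 0.8),
}


def play_round(move, rng, enemy_hp=40, max_hits=6):
    """Reward +1 if the enemy is knocked out within max_hits attempts, else -1."""
    damage, hit_chance = MOVES[move]
    for _ in range(max_hits):
        if rng.random() < hit_chance:
            enemy_hp -= damage
        if enemy_hp <= 0:
            return 1
    return -1


def run_balance_test(rounds=20000, epsilon=0.1, seed=0):
    """Play many rounds, mostly repeating the best-known move, sometimes exploring."""
    rng = random.Random(seed)
    plays = {move: 0 for move in MOVES}
    value = {move: 0.0 for move in MOVES}  # running average reward per move
    for _ in range(rounds):
        if rng.random() < epsilon:
            move = rng.choice(list(MOVES))    # explore a random move
        else:
            move = max(value, key=value.get)  # exploit the best move so far
        reward = play_round(move, rng)
        plays[move] += 1
        value[move] += (reward - value[move]) / plays[move]
    return plays, value


def flag_exploits(value, threshold=0.9):
    """List moves whose estimated win rate is at or above the threshold."""
    return sorted(m for m, v in value.items() if (v + 1) / 2 >= threshold)


if __name__ == "__main__":
    plays, value = run_balance_test()
    print("plays per move:", plays)
    print("suspected exploits:", flag_exploits(value))
```

In this toy setting the designer would change a number in `MOVES`, rerun the test, and read the flagged moves the next morning. A real game would replace `play_round` with a call into the game itself.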

Autonomous agents

The quality of a virtual world lies in its coherence, not its realism. In other words, every virtual world has its own rules (for example, if there’s gravity or not, how the people who inhabit it move around, etc.), and these rules must be respected in all circumstances. Breaking one of them interrupts the player experience and the player’s sense of immersion in the game.

These rules can be divided into two categories: those associated with gameplay and those associated with behaviour.

A classic example of a broken gameplay-associated rule is when the enemy does not see the player when they should, or conversely, when enemies seem to be able to see through walls. These issues are complex because artificial intelligence is not necessarily the cause of them. For example, an enemy may not appear to see the player because the animation representing him is problematic (the animation for his eyes, for example). Conversely, the enemy who seems to be able to see through walls might in fact have simply overheard the player from the adjoining room. I won’t dwell too much on this category, since there are multiple causes of conflicting perceptions and these do not solely pertain to the agent’s behaviour.

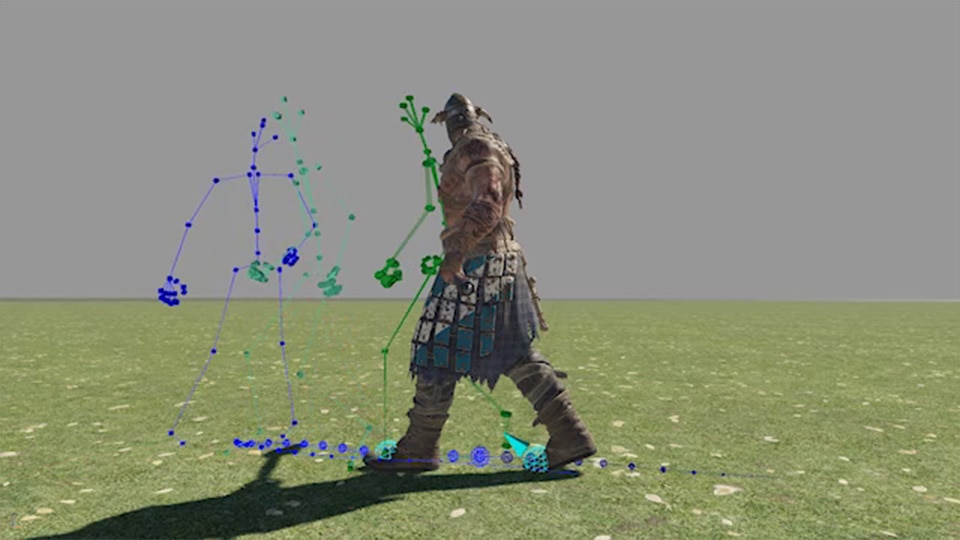

When I talk about behaviour, I am referring to how the agent interacts with the virtual world: how vehicles move, the path that agents take to move around, their ability to adapt to events occurring in the virtual world, and so on.

There is a clash of two types of logic. The first consists of programming the desired behaviour using decision trees (e.g., if this happens, then do this). The advantage of such systems is that they give the designer total control and can be fully tested. But the more situations there are, the more difficult these rules become to update and maintain. Imagine we’re programming a car that comes to an intersection. It needs to take numerous factors into account, such as whether there is a stop sign, a light, a pedestrian, another vehicle, etc. Logically, you need to program the car for all these eventualities, while also taking into account the braking distance. If you then add in another variable such as the weather, you have to account for new conditions, such as visibility, and adjust other parameters, such as braking distance. Scaling then becomes exponentially complex and you must limit the situations taken into account, which could potentially cause inconsistencies (e.g., why can the character climb onto a table but not onto the hood of a stationary car?[iii]). Worse, many contextual elements are not static but dynamic. For example, a character’s trajectory depends on other characters’ movements, which in turn depend on other factors, and so on, and so on.
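The scaling problem can be illustrated with a short Python sketch. The factor names and the rules inside `should_brake` are hypothetical, chosen only to mirror the intersection example; they are not taken from any real game. The point is the count: every new factor multiplies the number of situations the hand-written rules must be checked against.

```python
from itertools import product

# Hypothetical factors a car must consider at an intersection.
FACTORS = {
    "stop_sign": [False, True],
    "light": ["none", "green", "red"],
    "pedestrian": [False, True],
    "other_vehicle": [False, True],
}


def should_brake(stop_sign, light, pedestrian, other_vehicle):
    """Hand-written decision rules: if this happens, then do this."""
    if pedestrian:
        return True
    if light == "red":
        return True
    if stop_sign:
        return True
    if other_vehicle and light == "none":
        return True
    return False


def count_situations(factors):
    """Number of distinct situations the rules must be tested against."""
    return sum(1 for _ in product(*factors.values()))


if __name__ == "__main__":
    print(count_situations(FACTORS))       # 2 * 3 * 2 * 2 = 24
    with_weather = {**FACTORS, "weather": ["clear", "rain", "fog"]}
    print(count_situations(with_weather))  # 24 * 3 = 72
```

Adding a three-valued weather factor takes the table from 24 situations to 72, and `should_brake` would need a new parameter and a review of every existing rule, which is exactly the maintenance burden described above.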

One way of addressing this is to let the agent work out the optimum behaviours (those that result in the biggest reward) rather than predefining them. In our example, this would mean letting a vehicle determine the correct braking distance to allow it to reach the desired state.

We cannot, however, ignore the difficulty (and artistry) involved in creating reward functions that truly represent a desired behaviour. For example, if a vehicle is rewarded when it hasn’t caused an accident and is following the rules of the road, it might appear that the optimum behaviour is to stay parked in an authorized zone. To overcome this, the reward could be made up of discrete events (e.g., number of crashes) and continuous values (e.g., distance travelled). A large part of the researcher’s work consists of creating rewards that steer learning toward a desired behaviour while maintaining a balance between the incentive to explore new strategies and the use of tried and tested ones.
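The parked-car trap is easy to reproduce in a few lines of Python. Both functions below are hypothetical sketches, and the weights (100 per crash, 10 per violation, 1 per kilometre) are arbitrary assumptions that would need tuning in practice.

```python
def naive_reward(crashes, violations):
    """Rewards only the absence of mistakes, so a parked car scores perfectly."""
    return 1.0 if crashes == 0 and violations == 0 else 0.0


def driving_reward(crashes, violations, distance_km,
                   crash_penalty=100.0, violation_penalty=10.0,
                   distance_bonus=1.0):
    """Combines event counts (crashes, violations) with a continuous value
    (distance travelled), so that doing nothing is no longer optimal."""
    return (distance_bonus * distance_km
            - crash_penalty * crashes
            - violation_penalty * violations)


if __name__ == "__main__":
    parked = dict(crashes=0, violations=0)
    print(naive_reward(**parked))                      # perfect score for standing still
    print(driving_reward(**parked, distance_km=0.0))   # no progress, no reward
    print(driving_reward(crashes=0, violations=0, distance_km=12.0))
    print(driving_reward(crashes=1, violations=0, distance_km=12.0))
```

With the naive version, staying parked earns the maximum reward. With the combined version, a parked car earns nothing, a clean 12 km trip is rewarded, and the same trip with one crash is heavily penalized.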

Virtual players

The online gaming experience depends on many different factors. One of the most important of these is the ability to play with other compatible players, i.e., players whose level is neither too high nor too low, and who aren’t too far away to ensure a good network response time (the “ping”). Depending on the game mode selected or when you want to play, the number of compatible players can vary greatly, not to mention players who may already be engaged in a game.

Worse still, what happens if a player leaves the game, voluntarily or not?

This is when bots can intervene to reproduce a player’s behaviour. The system’s objective is to reproduce a believable game style that corresponds to a player of a specific level. This last point is important, because artificial intelligence faces two types of problem: those where all the information is known (e.g., chess[iv]) and those where only part of the information is known (e.g., poker[v]). To be believable, such a system must not be seen by the player as having information, precision or a reaction time beyond that of a human player. In other words, the virtual player shouldn’t be accurate so much as fun.
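As a rough illustration of these three constraints (information, precision and reaction time), here is a minimal Python sketch of a bot that aims at a target. All names are hypothetical, and the 150 ms floor on reaction time is an assumed value for the example, not a measured one. The bot refuses to act on a target it could not see, waits for a human-like delay, and adds random error to its aim.

```python
import random
from dataclasses import dataclass

# Assumed floor on reaction time, in milliseconds (illustrative value only).
MIN_HUMAN_REACTION_MS = 150.0


@dataclass(frozen=True)
class SkillProfile:
    """Hypothetical description of the player level the bot should imitate."""
    reaction_ms: float         # average reaction delay
    reaction_jitter_ms: float  # spread of the reaction delay
    aim_error_deg: float       # spread of the aiming error


def humanlike_shot(target_angle_deg, seen_at_ms, target_visible, profile, rng):
    """Return (fire_time_ms, aim_angle_deg), or None if the target is hidden."""
    if not target_visible:
        return None  # no information beyond what a human player could see
    delay_ms = max(MIN_HUMAN_REACTION_MS,
                   rng.gauss(profile.reaction_ms, profile.reaction_jitter_ms))
    aim_deg = target_angle_deg + rng.gauss(0.0, profile.aim_error_deg)
    return seen_at_ms + delay_ms, aim_deg


if __name__ == "__main__":
    rng = random.Random(1)
    average_player = SkillProfile(reaction_ms=250.0, reaction_jitter_ms=60.0,
                                  aim_error_deg=2.0)
    print(humanlike_shot(30.0, 1000.0, True, average_player, rng))
    print(humanlike_shot(30.0, 1000.0, False, average_player, rng))
```

Changing the `SkillProfile` values is one simple way to approximate players of different levels, although it says nothing yet about whether the result is fun to play against.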

Determining the rewards to train a system to be effective is complex, but determining the rewards to train a system to be fun is significantly more challenging. This is why the most believable bots are often associated with controllable environments that offer a limited depth of gameplay. More often than not, these bots are configured by manually determined heuristics that make it appear as though the bot is making decisions when in fact at least some of its actions are scripted.

For example, when a chatbot is used to provide online support, very often it asks you to describe the problem in detail, only to follow up with very general questions or answers such as “Just let me check this for you.” Most of the time, the primary objective of these responses is to give the impression of immediate support while the system tries to track down an available human. Correctly scripted questions or phrases give the impression that users are talking to a human being, provided it is a limited and well-known situation. The Turing test, which involves having a remote conversation without knowing whether you are actually talking to a human being or a machine, has not yet been successfully passed, and a number of researchers are now turning their attention to the challenge of synthesized communication (natural language processing or NLP)[vi].

The same principle applies to virtual players: the more open and varied the gameplay, the harder it is to create truly believable systems.

Bots are useful tools for helping game developers create more varied and credible worlds and directly improve the player experience. However, there is still a lot of work to be done to convincingly reproduce human behaviour in terms of the human ability to create, adapt and, above all, surprise. It is therefore unsurprising that bots and research into them are of strategic interest to video game production, and that video games, due to their richness and complexity, provide an excellent field of exploration[vii] for autonomous agent AI-centred research.

Author: Yves Jacquier

[i] https://www.humanbrainproject.eu

[ii] For example, read Le mythe de la singularité : faut-il craindre l’intelligence artificielle? by Jean-Gabriel Ganascia, The Inevitable by Kevin Kelly, or another of Kelly’s essays here: https://www.wired.com/2017/04/the-myth-of-a-superhuman-ai/

[iii] In reality, it is a bit more complicated, as movements are controlled by the behaviour but also by the general rules of permitted movements, often dictated by a navigation mesh or navmesh. However, this clearly shows that adding a new option requires going back over the numerous rules that define possible behaviours, which involves combining behavioural questions with a .

[iv] The most well-known example of this to date is AlphaGo Zero https://deepmind.com/blog/article/alphago-zero-starting-scratch.

[v] For example, read https://www.nature.com/articles/d41586-019-02156-9.

[vi] When I access online support that uses chat software, I like to test the limits of these systems with sentences that only a human could appreciate. One of my favourites is, “Do I have time to order myself a pizza?”

[vii] For example, https://deepmind.com/blog/article/alphastar-mastering-real-time-strategy-game-starcraft-ii: the idea put forward is to demonstrate an artificial intelligence able to make optimum decisions that require long-term planning and that have a large number of possible actions, in real time and with imperfect information.