![[La Forge] Robust Motion In-betweening - keyframes](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/4sKaaxoWvdL3wph2QOeFoh/8b11fdd5bcbe6bb96863cfa4907401ae/-La_Forge-_keyframes.jpeg)

Stereotypical image of a keyframe sketch, where an animator would need to draw the in-between frames according to the timing chart on the right. Our system automates the in-betweening job. (source)

At Ubisoft, we are always trying to build evermore believable worlds. This quest for realism affects many aspects of a video game, and character movement is certainly one of those. Natural movement of characters is key to have players relate to them and making games more immersive. However, creating high quality animations is a very resource-intensive process. In this work, we present a data-driven approach, based on adversarial recurrent neural networks to automate parts of the animation authoring pipeline. It leverages high-quality Motion Capture (MOCAP) data to generate quality transitions between temporally sparse keyframes. (Paper PDF)

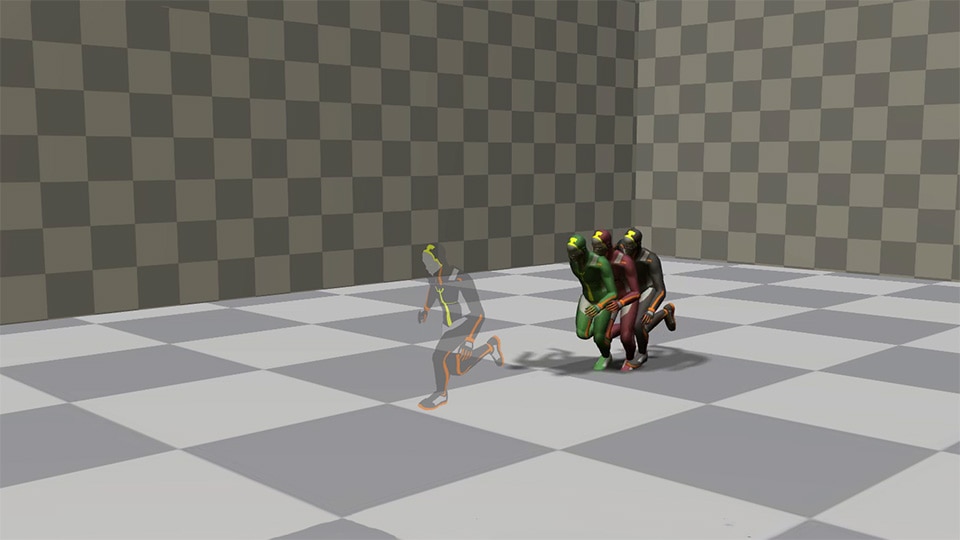

A canonical example of desired transitions in a video game. We have 2 unique keyframes (crouching and running) that have been duplicated to create a 3-segment sequence. All consecutive keyframes are one second appart, which in our case corresponds to 30 frames. The animation is generated by our model.

HOW IT’S USUALLY DONE

Motion Capture

Over the years MOCAP (for MOtion CAPture) technologies have become widely used in video games and cinema for realistic animation authoring due to their superior fidelity to re-create human movements in animations. However, MOCAP sessions as well as the needed post-processing of sequences are very ressource-intensive and avoiding new capture sessions is always desired. Re-purposing existing MOCAP for new animation needs is therefore often investigated before proceeding with a new capture. However, finding the right MOCAP sequences for specific needs is not always straightforward and modifying an existing sequence to fit a novel animation constraints can also be time-consuming.

Keyframe Animation

Keyframe, or hand-animation is another way to produce artificial movement and was for a while the main technique for drawn animations. Here again, this can be a long, tedious process in order reach natural 3D motion for AAA games. Indeed, since the current animation tools lack a high-quality in-betweening functionality for temporally sparse keyframes, animators spend a lot of time authoring many dense keyframes. Our system aims at reducing this load by allowing sparse keyframes to be automatically linked through natural motion, learned from MOCAP data. This is reminiscent of the job of in-betweening in the traditional animation pipelines where an animator is tasked with creating the motion linking keyframes provided by an artist or a senior animator.

AUTOMATIC MOTION IN-BETWEENING

Overview

Our system is designed to take as inputs up to 10 seed frames as past context, along with a target keyframe and produces the desired number of transition frames to link the past context and the target. When generating multiple transitions from several keyframes, the model is simply applied in series, using its last generated frames as past context for the next sequence.

![[La Forge] Robust Motion In-betweening - overview_combined_gray2-600x684](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/1Z6s0Zd14o6jhdr9ub4gfd/1b0949065a65257f16dad4a3f21968d3/-La_Forge-_overview_combined_gray2-600x684.png)

A visual overview of our system. At a high level, our system takes up to 10 seed frames as past context and a target keyframe to produce the missing transition in between. At a lower level, the auto-regressive, recurrent model produces one frame at a time until all missing frames are generated.

Architecture

![[La Forge] Robust Motion In-betweening - timestep1_losses-1](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/42Vu3XYLnHO1TrukbVtJcI/1e11d91bec7e5a3ef0d0eb5803dd116b/-La_Forge-_timestep1_losses-1.png)

Our architecture is based on the Recurrent Transition Networks (RTN) that we presented at SIGGRAPH Asia 2018.

It uses 3 separate feed-forward, fully connected encoders.

- The state encoder takes as input the current character pose, expressed as a concatenation of the root velocity and quaternion orientation, joint-local quaternions and feet-contact binary values.

- The target encoder takes as input the target pose, expressed as a concatenation of root orientation and joint-local quaternions.

- The offset encoder takes as input the current offset from the target keyframe to the current pose, expressed as a concatenation of linear differences between root positions and orientations, and between joint-local quaternions.

In the original RTN architecture, the resulting embeddings for each of those inputs are passed directly to an Long-Short-Term-Memory (LSTM) recurrent layer, responsible for modeling the temporal dynamics in the motion.

The output of the LSTM layer is then passed to the feed-forward decoder that will output the predicted character pose and contacts in order to compute the different loss functions.

We have an angular Quaternion Loss computed on the root and joint-local quaternions, a Position Loss computed on the global position of each joint retrieved though Forward Kinematics (see Quaternet), a Foot Contact Loss based on contacts prediction, and an Adversarial Loss.

The Adversarial Loss is obtained by training two additional feed-forward discriminator networks (or Critics) that are trained to differentiate real motion segments from generated ones. We use sliding critics that score multiple, fixed-length segments for each generated transition. Cshort-term takes segments of two frames as input, while Clong-term takes segments of 10 frames as inputs. The critics are trained with the Least-Square GAN formulation, and their scores for all segments are averaged to get the final loss.

ROBUSTNESS THROUGH ADDITIVE LATENT MODIFIERS

Time-to-arrival embeddings

![[La Forge] Robust Motion In-betweening - timestep2-600x725](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/43z5TLGVnJChZXBDMVIMOK/400db1f95c1ac3bcd231c7a246261d23/-La_Forge-_timestep2-600x725.png)

Our first latent modifier is borrowed from Transformer networks, widely used in Natural Language Processing (NLP). These attention-based models do not use any recurrent layer when processing sentences, and need some way of knowing the token sequence ordering. They use a dense, continuous vector, called a positional encoding, that evolves smoothly with the token position. Each dimension of this vector follows a sine wave with a different frequency or phase shift, making the vector unique for each position.

![[La Forge] Robust Motion In-betweening - tta_comp2-600x841](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/7BFTH2bu6VLJfYHG7yrzZO/493c8cdd678ae3568bfdb23d56badea5/-La_Forge-_tta_comp2-600x841.png)

In our work, we use the same mathematical formulation as positional encodings, but based on a time-to-arrival basis instead of token position. This time-to-arrival represents the number of frames left to generate before reaching the target keyframe. It thus pushes the input embeddings in different regions of the manifold depending on the time-to-arrival. Past a certain number of transition frames, Tmax(ztta), we also clip our embeddings so that they stay constant. This helps the model to generalize to longer transitions than seen during training, where the initial time-to-arrival embeddings would otherwise shift the input representations into unknown spaces of the latent manifold. The figure above shows visual depictions of ztta with and without Tmax(ztta))

Applying our time-to-arrival embeddings allows the neural network to handle different length of transitions for a given set of keyframes. This added flexibility is necessary for such a system to be useful for animators. We show the effects below, where only the temporal spacing of keyframes varies.

Scheduled target noise

![[La Forge] Robust Motion In-betweening - timestep3-768x928](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/1byKcxbYjjOd15S6RACzFi/5bdacdae273a5b5b75fc088c968a2e5a/-La_Forge-_timestep3-768x928.png)

In order to improve robustness to keyframe modifications as well as to enable sampling capabilities for our network, we employ a second kind of additive latent modifier that we call scheduled target noise. This vector ztarget = z * σtarget is a standard normal random vector z scaled by a stochasticity control-parameter σtarget and is sampled once per transition. It is applied on the target and offset embeddings only. We scale ztarget by a scalar λtarget that linearly decreases during the transition and reaches zero 5 frames before the target. This has the effect of distorting the perceived target and offset early in the transition while the embeddings gradually become noise-free as the motion is carried out. Since we are shifting the target and offset embeddings, this noise has a direct impact on the generated transition and allows animators to easily control the level of stochasticity of the model by specifying the value of σtarget before launching the generation. Using σtarget=0 make the system deterministic. We show the effects below, where the 3 variations per segments are sampled with σtarget = 0.5:

ADDITIONAL RESULTS

For more details and quantitative results, please look at our SIGGRAPH 2020 paper Robust Motion In-Betweening. The accompanying video shows additional qualitative results and limitations, as well as an example workflow of an animator using our system inside a custom Motion Builder plugin.

FINAL NOTES

This is work represents a considerable step towards bridging the gap between motion capture and hand-animation by bringing MOCAP quality to the keyframe-animation pipeline. Our system can help us reduce our reliance on costly capture sessions while lowering the workload of animators by augmenting common softwares’ interpolation techniques. Although we are already starting to apply this in game production, a lot of directions can still be explored to tackle and solve the remaining challenges presented by the edge cases.

More Animation Research from Ubisoft La Forge

- We recently released the Ubisoft LaForge Animation Dataset LaFAN1, which was used to train our models for the paper.

- Ubisoft La Forge’s animation team also published at SIGGRAPH 2020 a paper on Learned Motion Matching, in which they solve the memory scalability issues of regular Motion Matching and use parts of the LaFAN1 dataset.

- Making Machine Learning Work: From Ideas to Production Tools

.

BibTex

@article{harvey2020robust,

author = {Félix G. Harvey and Mike Yurick and Derek Nowrouzezahrai and Christopher Pal},

title = {Robust Motion In-Betweening},

booktitle = {ACM Transactions on Graphics (Proceedings of ACM SIGGRAPH)},

publisher = {ACM},

volume = {39},

number = {4},

year = {2020}

}